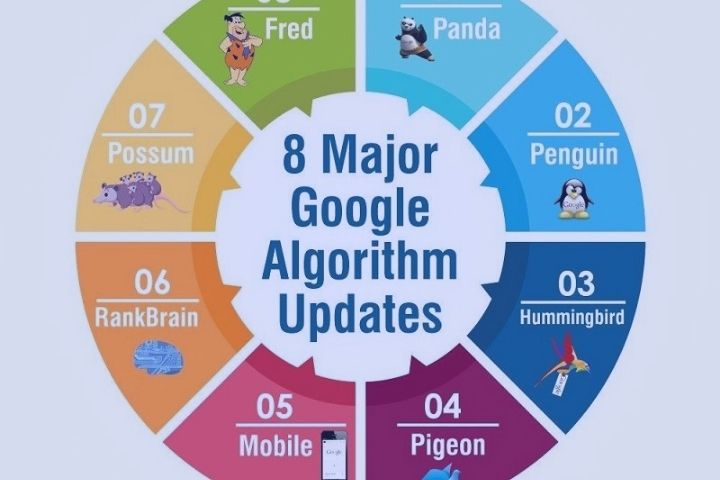

What Are The Most Important Google Algorithms?

When people talk about the process Google uses to rank websites in search results, they call it “the Google algorithm.” Hundreds of articles on the Internet try to explain how the Google algorithm works. The most obvious flaw in many of them is that there is no single algorithm.

Google search ranking is a collection of many algorithms. Even Google’s own explanation of how search works refers to algorithms in the plural.

An algorithm is defined as an “ordered and finite set of operations that allows finding the solution to a problem.” Because Google’s results are not put together by humans, they are driven by software containing “algorithmic” instructions for working out what a user might be searching for. Trying to explain how Google works as one simple algorithm therefore ignores how complex search rankings really are.


Indexing Algorithm

The indexing algorithm determines how to cache a web page and which database tags should be used to categorize it. It is based on the theories of library science, since it archives Internet pages in specific databases. This is the algorithm that decides whether a URL will be included in the Google index.

Of the three algorithms that feed into ranking, this is the most complex. It decides whether the content is too similar to another URL Google has discovered on the Internet, whether the website is spam or harmful in some way, and whether its quality falls below the standard for indexing, for example because the content is too thin or carries too many ads. When a URL is judged to be a duplicate or near-duplicate of another URL, Google decides which of them is best to index. The indexing algorithm also decides whether to trust the content, based on the technical SEO signals of the page.
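Google has not published exactly how it decides that two URLs are near-duplicates. As a rough illustration only, the sketch below uses a classic textbook technique, word shingling with Jaccard similarity, to measure how much two pieces of text overlap. The function names, the shingle size `k=3` and the `0.8` threshold are hypothetical choices, not Google’s.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or nothing if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_near_duplicate(doc_a: str, doc_b: str, threshold: float = 0.8) -> bool:
    # The threshold is a hypothetical cut-off, not a value Google publishes.
    return jaccard(shingles(doc_a), shingles(doc_b)) >= threshold


a = "Google search ranking is a collection of many algorithms"
b = "Google search ranking is a collection of many different algorithms"
print(jaccard(shingles(a), shingles(b)))  # 6 shared shingles out of 9 in total
print(is_near_duplicate(a, b))            # False at the 0.8 threshold
```

Inserting a single word breaks the two shingles that span it, which is why the score drops to 6/9 even though the sentences are nearly identical. A lower threshold would flag them as near-duplicates.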

Discovery Algorithm

This algorithm should not be confused with Google Discover, the source of traffic coming from Android devices. It is the algorithm that finds new pages and sites that Google has not previously indexed, and it draws on a wide range of tools to discover new URLs.

It relies on many different sources, including naked URLs (URLs pasted into text without being clickable links), XML sitemaps, Google Analytics, Chrome and, of course, URLs submitted to Google through Google Search Console. The algorithm simply looks up URLs and compares them with the URLs it already knows.

When this algorithm finds a new URL that is not yet listed, it queues it for future crawling. Beyond adding URLs to the crawl queue, Discovery does not judge the quality of the content behind each link.
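The look-up-and-queue behaviour described above can be pictured as a set of known URLs plus a first-in, first-out crawl queue. This is a simplified sketch, not Google’s implementation: the `Discoverer` class, its method names and the deliberately loose regular expression for naked URLs are all assumptions made for illustration.

```python
import re
from collections import deque

# Deliberately loose pattern for "naked" URLs pasted into plain text
# (an assumption; real URL extraction needs a far more careful parser).
URL_PATTERN = re.compile(r'https?://[^\s<>"]+')


class Discoverer:
    """Hypothetical discovery step: compare URLs to known ones, queue the new ones."""

    def __init__(self, known_urls=None):
        self.known: set[str] = set(known_urls or [])
        self.crawl_queue: deque[str] = deque()

    def discover(self, urls):
        """Queue every URL not seen before and return the newly queued URLs.

        No content quality is judged here; that happens in later stages.
        """
        new = []
        for url in urls:
            if url not in self.known:
                self.known.add(url)
                self.crawl_queue.append(url)
                new.append(url)
        return new

    def discover_from_text(self, text):
        # Strip trailing sentence punctuation picked up by the loose pattern.
        return self.discover(u.rstrip(".,;:!?)") for u in URL_PATTERN.findall(text))


d = Discoverer(["https://example.com/"])
d.discover_from_text("See https://example.com/ and https://example.com/new-page.")
print(list(d.crawl_queue))  # ['https://example.com/new-page']
```

URLs from an XML sitemap or a Search Console submission could be passed straight to `discover()` as a list, while free text containing naked URLs goes through `discover_from_text()`.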

Crawling Algorithm

This algorithm is designed to crawl and understand the entire web. Once a URL is discovered, Google has to decide whether it is worth spending the resources needed to crawl it. Google weighs many factors in this decision, including the number of links pointing to the URL, the authority of its domain, where the URL was discovered and how newsworthy it is. For example, a URL discovered in a Twitter feed with multiple mentions may score higher and is therefore more likely to be crawled.

Like the Discovery algorithm, this algorithm has a single purpose: it only crawls pages and does not evaluate the quality of their content.
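Google does not disclose how it weighs these factors, but the decision can be pictured as a priority score feeding a crawl queue with a limited budget. In this hypothetical sketch, `CandidateUrl`, the 0 to 1 `domain_authority` and `source_weight` fields, the caps and the `WEIGHTS` values are all invented for illustration.

```python
import heapq
from dataclasses import dataclass


@dataclass
class CandidateUrl:
    url: str
    inbound_links: int       # number of links pointing at the URL
    domain_authority: float  # hypothetical 0-1 estimate of the domain's authority
    source_weight: float     # hypothetical 0-1 trust in where the URL was found
    mentions: int            # hypothetical newsworthiness signal, e.g. social mentions


# Hypothetical weights; Google does not publish how it combines these factors.
WEIGHTS = {"links": 0.4, "authority": 0.3, "source": 0.2, "news": 0.1}


def crawl_priority(c: CandidateUrl) -> float:
    # Cap the counts so one very large value cannot dominate the score.
    links = min(c.inbound_links, 100) / 100
    news = min(c.mentions, 10) / 10
    return (WEIGHTS["links"] * links
            + WEIGHTS["authority"] * c.domain_authority
            + WEIGHTS["source"] * c.source_weight
            + WEIGHTS["news"] * news)


def crawl_order(candidates, budget):
    """Return up to `budget` URLs to crawl, highest priority first."""
    # heapq is a min-heap, so negate the score to pop the best URL first.
    heap = [(-crawl_priority(c), c.url) for c in candidates]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(min(budget, len(heap)))]


tweeted = CandidateUrl("https://example.com/tweeted", 5, 0.5, 0.8, mentions=8)
quiet = CandidateUrl("https://example.com/quiet", 5, 0.5, 0.8, mentions=0)
print(crawl_order([quiet, tweeted], budget=1))  # ['https://example.com/tweeted']
```

With every other factor equal, the URL with more mentions (standing in for the Twitter example) is picked first, and a budget of one means the other URL waits for a later crawl.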

Classification Algorithm

The fourth is the classification, or ranking, algorithm. It uses the information gathered by the three algorithms above to apply a ranking methodology to each page, producing what we know as search rankings. Once Google has accepted a URL into its index, it uses traditional library science to categorize the page for future rankings.

Crawling a page does not guarantee that it will be indexed, and indexing does not guarantee that it will be ranked in search results or receive traffic. Ranking scores determine the relative positions of the pages displayed across multiple queries.
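The split between indexing and ranking can be illustrated with a toy function: only indexed pages are eligible, and among those, each page’s score for the query decides its position. The `Page` class and its per-query `scores` dictionary are hypothetical; how Google actually computes ranking scores is not public.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    url: str
    indexed: bool
    # Hypothetical per-query ranking scores; Google's real scoring is not public.
    scores: dict[str, float] = field(default_factory=dict)


def rank(pages, query, top_k=10):
    """Return up to top_k indexed pages for `query`, highest score first."""
    # Pages that were crawled but not indexed never reach this stage,
    # and indexed pages with no score for the query are not shown for it.
    eligible = [p for p in pages if p.indexed and query in p.scores]
    eligible.sort(key=lambda p: p.scores[query], reverse=True)
    return [p.url for p in eligible[:top_k]]


pages = [
    Page("https://example.com/a", indexed=False, scores={"seo": 0.9}),
    Page("https://example.com/b", indexed=True, scores={"seo": 0.4}),
    Page("https://example.com/c", indexed=True, scores={"seo": 0.7}),
    Page("https://example.com/d", indexed=True, scores={"other": 1.0}),
]
print(rank(pages, "seo"))  # ['https://example.com/c', 'https://example.com/b']
```

Page `a` has the highest score but was never indexed, so it gets no visibility at all, and page `d` is indexed but earns no position for this query.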